DynamoDB + SQS - The Ultimate Guide

Written by Charlie Fish

Published on September 25th, 2022


SQS vs DynamoDB - Understand The Difference

Amazon SQS and DynamoDB are very different technologies built for very different use cases. Even so, they can be seamlessly integrated to increase capacity, improve performance, reduce costs, separate workloads, modify data, and much more. This is where an integrated, managed platform like Amazon Web Services shines: you don't need to worry about scaling individual servers or managing the infrastructure, yet you can combine two powerful technologies into a tightly integrated, scalable solution that fits your needs. Let's discuss what each technology is before diving into how they can be used together.

What is Amazon SQS?

Amazon Simple Queue Service (SQS) is an extremely scalable queue service. You can post messages to your queue that can then be read and processed by another service (e.g., Lambda, EC2, an offsite server via the AWS SDK, and more).

Since SQS is a queue service, it is not meant to act as a database. It is a temporary (short-term) storage location for messages that need to be processed. You cannot retrieve a specific message directly, and you cannot query or search for messages within the queue. You can only add messages, receive the next available messages (in whatever order SQS decides), and delete messages from the queue.

Messages can be text up to 256KB in size and can be retained within the queue for up to 14 days. Once messages are sent, they cannot be modified.
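Because the size limit is measured in bytes rather than characters, it can help to check a payload before sending it. The following is a minimal sketch, not an official helper: the function names are hypothetical, and it only measures the message body (it assumes you are not also sending message attributes, which count toward the limit as well).

```javascript
// SQS limit quoted above: 256 KB per message, measured in bytes.
const MAX_MESSAGE_BYTES = 256 * 1024;

// Returns the UTF-8 size of a message body in bytes.
function messageSizeInBytes(body) {
  return Buffer.byteLength(body, "utf8");
}

// Hypothetical helper: true when the body alone fits within the limit.
function fitsInSqsMessage(body) {
  return messageSizeInBytes(body) <= MAX_MESSAGE_BYTES;
}

const order = JSON.stringify({ orderId: "1001", status: "paid" });
console.log(fitsInSqsMessage(order)); // true
console.log(fitsInSqsMessage("x".repeat(MAX_MESSAGE_BYTES + 1))); // false
```

Payloads that do not fit are commonly stored elsewhere (for example in S3 or DynamoDB), with only a reference placed on the queue.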

Some common use cases for SQS include:

- Request Offloading: Operations that are slow or take a long time can be sent to an SQS queue for asynchronous processing off of the main interactive path onto a different service whose sole job is handling asynchronous background tasks.

- Scalability: Due to the scalable nature of SQS, you can easily add more messages to a queue. As your queue grows in size, you can use EC2 Auto Scaling or Lambda to easily scale your processing capacity to meet the demand of your queue without any concerns of synchronous bottlenecks.

- Resiliency: Because queues are decoupled from your application itself, application or system failures/downtime will not impact the queue. Messages will remain in the queue, and once your system is back online, you can continue to process the messages from the queue. This decouples the reading/processing action from the writing action, allowing for a more resilient system.

- Fanout: You can use SQS to fan out messages to multiple consumers. For example, you might want to send the user an email when a payment is successful, as well as upload the customer's receipt to S3, and maybe also send a Slack message to your company's internal chat room to let everyone know a payment was successful. SQS works great for this use case, typically with an SNS topic delivering a copy of each message to a separate queue for each consumer.

- And Many More: The possibilities of what you can do with SQS are endless. You can use SQS to decouple your application, create a scalable system, or even use it as a message bus to connect multiple applications together.

Now let's talk about the two types of queues (Standard & FIFO) that SQS offers:

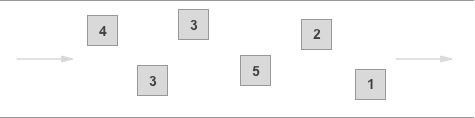

Standard Queues

Standard queues include the following unique features:

- Lower cost: As of posting this guide, standard queues are more cost-effective than FIFO queues in the us-east-1 region.

- Unlimited throughput: Standard queues support nearly unlimited throughput, allowing you to process as many messages as you want.

- At-Least-Once Delivery: Each message will be guaranteed to be delivered at least once. However, it is possible that a message may be delivered more than once. In practice, this is extremely rare, even at scale, but it is important to account for in your use case.

- Best-Effort Ordering: Sometimes messages might be delivered in a different order than they were received in. Once again, this is rare, but it is important to understand and account for.
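One common way to account for at-least-once delivery is to make the consumer idempotent, so that a repeated message has no extra effect. The sketch below is one possible approach, not a prescribed pattern: `makeIdempotentHandler` is a hypothetical helper, the message shape follows the records a Lambda SQS trigger receives (`messageId`, `body`), and the in-memory `Set` is an assumption for illustration only.

```javascript
// Wraps a message handler so that each messageId is processed at most once.
// Assumption: the Set lives in memory, so it is lost on restart and is not
// shared between workers. A real system would use a durable store instead.
function makeIdempotentHandler(handle) {
  const seen = new Set();
  return async function handleOnce(message) {
    if (seen.has(message.messageId)) {
      return false; // duplicate delivery: skip it
    }
    seen.add(message.messageId);
    try {
      await handle(message);
      return true;
    } catch (err) {
      seen.delete(message.messageId); // allow a retry after a failure
      throw err;
    }
  };
}

// Simulate SQS delivering the same message twice.
const processed = [];
const handleOnce = makeIdempotentHandler(async (message) => {
  processed.push(message.body);
});

async function main() {
  await handleOnce({ messageId: "m-1", body: "charge order 1001" });
  await handleOnce({ messageId: "m-1", body: "charge order 1001" });
  await handleOnce({ messageId: "m-2", body: "charge order 1002" });
  console.log(processed.length); // 2
}
main();
```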

FIFO Queues

FIFO queues include the following unique features:

- High Throughput: By default, FIFO queues support up to 300 messages per second (up to 3,000 per second if you batch messages). You can enable high throughput mode, which will increase this to 3,000 messages per second (and 30,000 if you batch messages).

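- Exactly-Once Processing: Each message is delivered once and remains available until you process and delete it. FIFO queues do not introduce duplicates, and a message that is sent again with the same deduplication ID within the 5-minute deduplication interval is not delivered a second time.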

- First-In-First-Out Delivery: Messages are guaranteed to be delivered in the same order in which they were added to the queue.
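When sending to a FIFO queue, ordering is applied per message group, so each message carries a `MessageGroupId`, and (unless content-based deduplication is enabled on the queue) a `MessageDeduplicationId`. The sketch below only builds the parameters for a `SendMessage` call; the order fields, the helper name, and the queue URL are hypothetical placeholders.

```javascript
// Builds SendMessage parameters for a FIFO queue.
// Assumptions: orders for one customer must stay in order (so the customer ID
// is the group), and an order reaches a given status only once (so the
// order ID plus status is a stable deduplication ID).
function buildFifoMessage(queueUrl, order) {
  return {
    QueueUrl: queueUrl,
    MessageBody: JSON.stringify(order),
    MessageGroupId: order.customerId,
    MessageDeduplicationId: `${order.orderId}:${order.status}`,
  };
}

const params = buildFifoMessage(
  "https://sqs.us-east-1.amazonaws.com/123456789012/orders.fifo", // placeholder URL
  { orderId: "1001", customerId: "c-42", status: "paid" }
);
console.log(params.MessageGroupId); // c-42
console.log(params.MessageDeduplicationId); // 1001:paid
```

Choosing a narrow group (per customer rather than one group for the whole queue) keeps ordering where it matters while still letting unrelated groups be processed in parallel.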

What is Amazon DynamoDB?

Unlike SQS, DynamoDB is a NoSQL database service. Items stored in DynamoDB can be queried, directly retrieved, updated, and persisted. While DynamoDB has the potential to act as a queue, SQS is a much better fit for this use case. DynamoDB also has larger storage limits and can sometimes be more expensive than SQS.

It is important to understand that DynamoDB and SQS are not considered alternatives to one another. They are two completely different technologies for different use cases. Although you can use DynamoDB to create a queue-like system (similar to SQS), this is not what it was designed for, and thinking about it as an alternative to SQS is an anti-pattern.

It is highly suggested that you read the guide on What Is DynamoDB? Ultimate Introduction for Beginners for more information about what DynamoDB is.

What are Amazon DynamoDB Streams?

DynamoDB Streams are a feature built into DynamoDB that lets you subscribe to changes made to your DynamoDB table. They are a great way to add messages to an SQS queue when a change is made to your DynamoDB table. You can then have a consumer on the SQS queue further handle the DynamoDB change (e.g., send an email, clear a cache, etc.).

DynamoDB Streams are the primary way we will work with SQS in this guide. However, we will also use the AWS SDK directly to add/modify/delete data from our DynamoDB table based on SQS messages.

The Dynobase guide on DynamoDB Streams - The Ultimate Guide (w/ Examples) is a great resource for learning more about DynamoDB Streams and how to get started with them.

DynamoDB Streams & SQS - What You Need To Know

There are two directions in which you can work with DynamoDB and SQS: consuming messages from SQS and writing them into DynamoDB, or adding messages to SQS when a change is made to your DynamoDB table.

We will be covering both of these directions in this guide. Both strategies will utilize Lambda functions to add messages to the SQS queue and process items from the SQS queue.

Although we will be using Lambda functions, it is possible to use systems like on-premise servers, EC2 instances, or more to handle the processing of messages from SQS.

DynamoDB Streams to SQS - Step-by-Step Guide

To start, we will be using DynamoDB Streams to add messages to SQS based on changes made to your DynamoDB table.

You can then set up a consumer on the SQS queue to further process the DynamoDB change. We won't be covering this part in much detail, as it is very application and use case dependent.

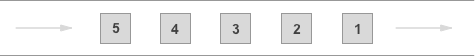

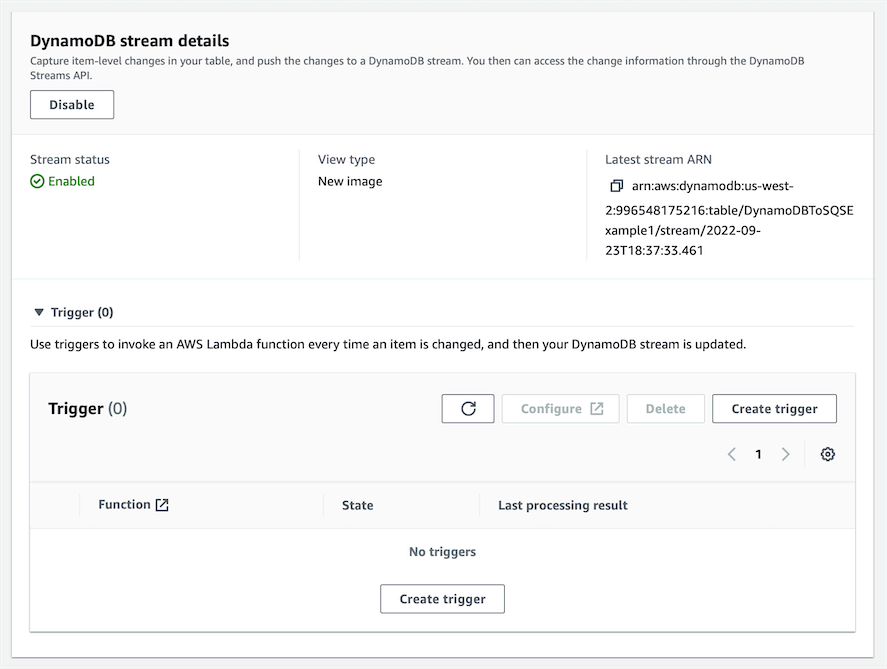

Step 1: Enable DynamoDB Streams on Your Table

To start, you will need to enable DynamoDB Streams on your DynamoDB table. This records each change made to your table in a stream, which our Lambda function will later read and forward to SQS.

Once you have located your DynamoDB Table in the AWS Console, navigate to the Exports and streams tab.

At the bottom of this page, there is a section called DynamoDB stream details with an Enable button. Click this to set up the DynamoDB Stream on your table.

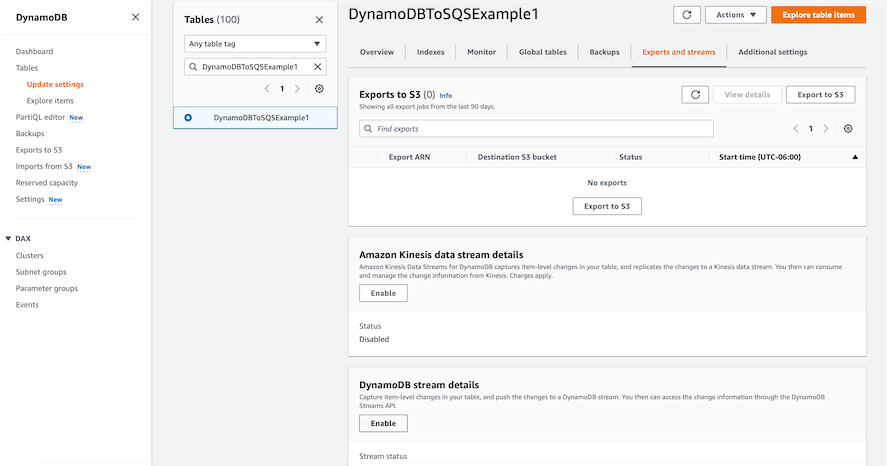

This will bring up a page asking which view type you would like to use for the Stream.

Select one of the View types, then click Enable stream:

- Key attributes only: Only the key attributes of the changed item.

- New image: The entire item as it appears after it was changed.

- Old image: The entire item as it appears before it was changed.

- New and old images: Both the new and old images of the changed item.

For now, I will choose New image. However, this option truly depends on your use case and how you want to process changes from your DynamoDB table.
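To make the view types more concrete, here is a rough sketch of how they show up in the records a Lambda function receives. The console labels map to the API values `KEYS_ONLY`, `NEW_IMAGE`, `OLD_IMAGE`, and `NEW_AND_OLD_IMAGES`. The sample record is made up for illustration and trimmed to the relevant fields, and `summarizeRecord` is a hypothetical helper.

```javascript
// Console label -> StreamViewType value used by the API.
const VIEW_TYPES = {
  "Key attributes only": "KEYS_ONLY",
  "New image": "NEW_IMAGE",
  "Old image": "OLD_IMAGE",
  "New and old images": "NEW_AND_OLD_IMAGES",
};

// Illustrative stream record for the "New image" view type.
// Attribute values use DynamoDB's typed JSON format ({ S: "..." } is a string).
const sampleRecord = {
  eventID: "1",
  eventName: "MODIFY", // INSERT, MODIFY, or REMOVE
  dynamodb: {
    Keys: { id: { S: "user-1" } },
    NewImage: { id: { S: "user-1" }, plan: { S: "pro" } },
    StreamViewType: VIEW_TYPES["New image"],
  },
};

// Reports which parts of the change are present in a record.
function summarizeRecord(record) {
  const { Keys, NewImage, OldImage, StreamViewType } = record.dynamodb;
  return {
    event: record.eventName,
    viewType: StreamViewType,
    keys: Keys,
    hasNewImage: NewImage !== undefined,
    hasOldImage: OldImage !== undefined,
  };
}

const summary = summarizeRecord(sampleRecord);
console.log(summary.hasNewImage); // true
console.log(summary.hasOldImage); // false
```

If a consumer needs to compare values before and after a change, New and old images is the view type that provides both.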

That is it for enabling DynamoDB Streams on your table. In a future step, we will be connecting the Lambda function to the DynamoDB Stream.

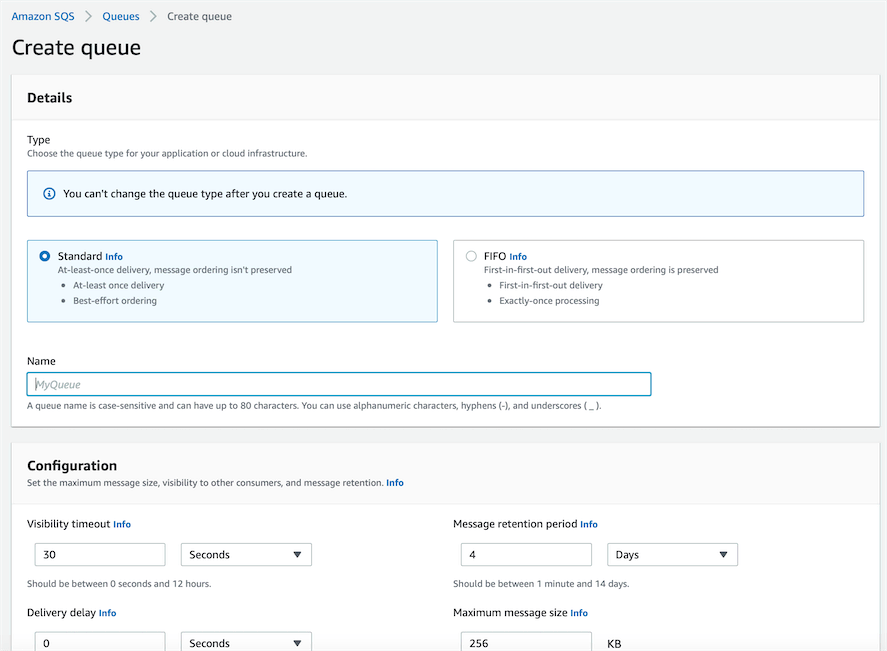

Step 2: Create SQS Queue

Next, you will need to create an SQS queue that will receive the messages from our DynamoDB Stream.

Within the AWS Console, there is a wizard for creating a new SQS queue.

We won't be going over all of the options in the wizard. But a couple of important settings to note are:

- At the top of the page, you can select the type of queue you would like to create. You can read more about the types of queues in the What is Amazon SQS? section above.

- The configuration options allow you to change things like retention period, maximum message size, and more.
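If you prefer to create the queue from code rather than the wizard, the same settings map to attributes of the SQS `CreateQueue` API. The sketch below only builds the parameters. `buildCreateQueueParams` and its option names are hypothetical, while `MessageRetentionPeriod` (seconds), `MaximumMessageSize` (bytes), and `FifoQueue` are the attribute names the API uses, with values passed as strings.

```javascript
// Builds CreateQueue parameters from a few friendly options.
// Defaults mirror the values discussed in this guide: 4 days of retention
// (the SQS default) and a 256 KB maximum message size.
function buildCreateQueueParams(name, options = {}) {
  const { fifo = false, retentionDays = 4, maxMessageKb = 256 } = options;
  const attributes = {
    MessageRetentionPeriod: String(retentionDays * 24 * 60 * 60),
    MaximumMessageSize: String(maxMessageKb * 1024),
  };
  let queueName = name;
  if (fifo) {
    attributes.FifoQueue = "true";
    // FIFO queue names must end with the .fifo suffix.
    if (!queueName.endsWith(".fifo")) queueName += ".fifo";
  }
  return { QueueName: queueName, Attributes: attributes };
}

const standard = buildCreateQueueParams("table-changes", { retentionDays: 14 });
console.log(standard.Attributes.MessageRetentionPeriod); // 1209600

const fifo = buildCreateQueueParams("table-changes", { fifo: true });
console.log(fifo.QueueName); // table-changes.fifo
```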

Step 3: Create Lambda Connector Function (With Sample Code)

Finally, we need to create a Lambda function that will be triggered by our DynamoDB Stream and add messages to our SQS queue for further processing.

Within the Lambda function I created, I added the following JavaScript/Node.js code:

This will add a message to the SQS queue with the NewImage of the DynamoDB change. This is the same as the New image View type we selected in Step 1.

Remember, you can write additional JavaScript code to further modify the message before it is added to the SQS queue. However, to separate concerns, it is recommended to do intensive processing on the SQS consumer.
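As a rough illustration of what such a connector can look like, here is a minimal sketch. It is written under a few assumptions: `makeStreamToSqsHandler` is a hypothetical factory, `sqs` is any object with a `sendMessage(params)` method that returns a promise (the aggregated `SQS` client from `@aws-sdk/client-sqs` in AWS SDK for JavaScript v3 works this way), and the queue URL comes from a `QUEUE_URL` environment variable that you would define yourself.

```javascript
// Creates a Lambda handler that forwards the NewImage of each stream record to SQS.
function makeStreamToSqsHandler(sqs, queueUrl) {
  return async function handler(event) {
    let sent = 0;
    for (const record of event.Records) {
      const newImage = record.dynamodb && record.dynamodb.NewImage;
      if (!newImage) continue; // e.g. REMOVE events carry no NewImage
      await sqs.sendMessage({
        QueueUrl: queueUrl,
        MessageBody: JSON.stringify(newImage),
      });
      sent += 1;
    }
    return { sent };
  };
}

// Possible wiring inside the Lambda function (assumed, not run here):
//   const { SQS } = require("@aws-sdk/client-sqs");
//   exports.handler = makeStreamToSqsHandler(new SQS({}), process.env.QUEUE_URL);
```

Passing the client in as an argument keeps the forwarding logic easy to test locally with a stand-in object. The function's execution role also needs permission to read the stream and to send messages to the queue.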

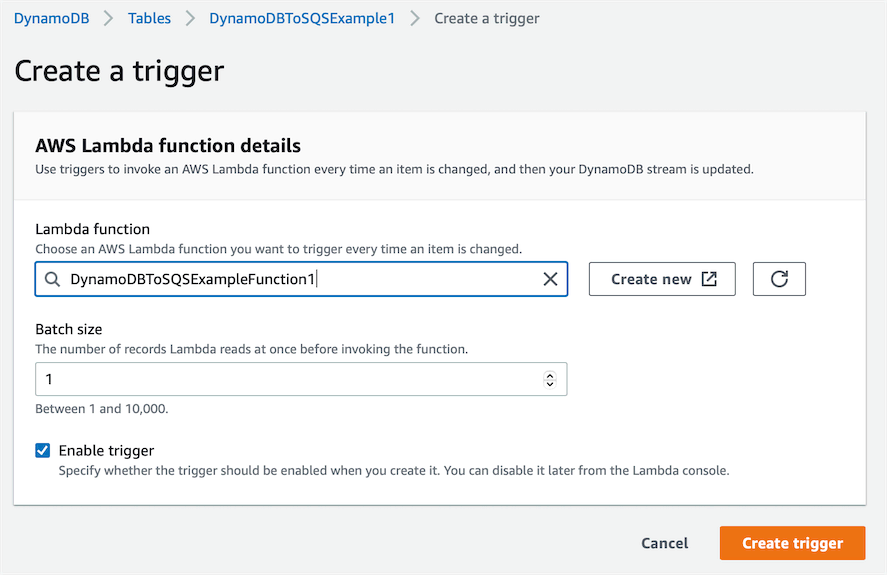

Step 4: Connect Lambda Function to DynamoDB Stream

Now that we have our Lambda function and SQS queue set up, we need to connect the Lambda function to the DynamoDB Stream.

You can do this by adding the Lambda function as a trigger to the DynamoDB Stream.

Click on the Create trigger button to set up the trigger.

Then simply click Create trigger to finish the setup.
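Behind the scenes, a trigger like this is a Lambda event source mapping, which can also be created through the Lambda `CreateEventSourceMapping` API. The sketch below only assembles the request parameters. The helper name, the stream ARN, and the function name are placeholders, and the batch size of 100 is just an example value.

```javascript
// Builds CreateEventSourceMapping parameters for a DynamoDB Stream trigger.
function buildStreamTriggerParams(streamArn, functionName) {
  return {
    EventSourceArn: streamArn, // the table's stream ARN
    FunctionName: functionName,
    StartingPosition: "LATEST", // only new changes; "TRIM_HORIZON" starts from the oldest record
    BatchSize: 100, // maximum records handed to one invocation
  };
}

const trigger = buildStreamTriggerParams(
  "arn:aws:dynamodb:us-east-1:123456789012:table/MyTable/stream/2022-09-25T00:00:00.000", // placeholder ARN
  "dynamodb-to-sqs-connector" // placeholder function name
);
console.log(trigger.StartingPosition); // LATEST
```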

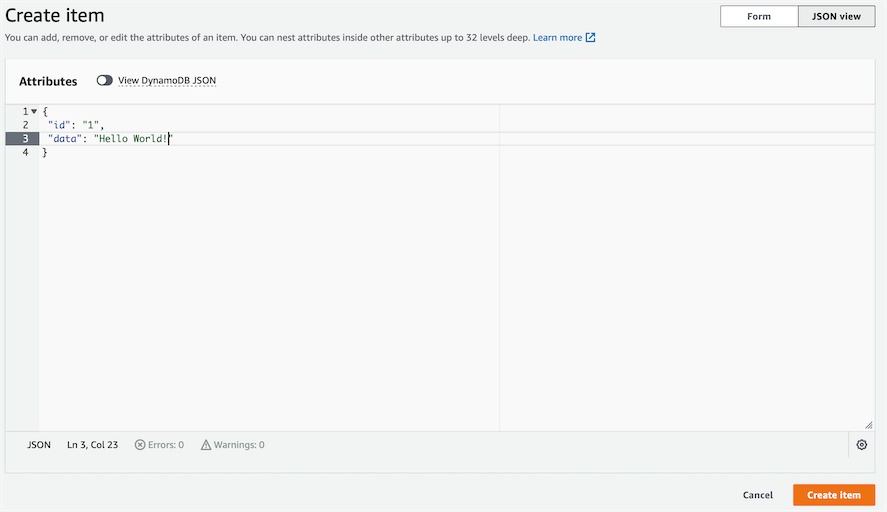

Testing

Now let's try it out in action and see how it works.

I'm going to create an item in my DynamoDB table.

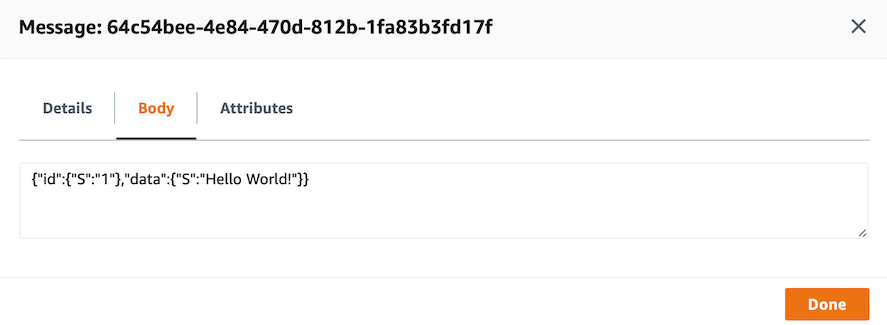

Then I will check the SQS queue to see if a message was added.

As you can see, the item successfully made it from DynamoDB to our Lambda Connector Function to SQS, which can then be used by a consumer to further handle the change.

SQS to DynamoDB - Step-by-Step Guide

Now let's talk about how to add items to DynamoDB from your SQS queue.

Step 1: Create resources

Just like above, we need to create a DynamoDB table, SQS queue, and Lambda function.

Step 2: Write Lambda Code (With Sample Code)

If we put the following code into our Lambda function, this will take the event and put an item into our DynamoDB Table for that item.
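The exact code depends on your table and message format, so treat the following as a minimal sketch under stated assumptions: `makeSqsToDynamoHandler` is a hypothetical factory, each SQS message body is a JSON object that already contains the table's key attributes, `docClient` is any object with a `put(params)` method that returns a promise (such as `DynamoDBDocument` from `@aws-sdk/lib-dynamodb`), and the table name comes from a `TABLE_NAME` environment variable that you would define yourself.

```javascript
// Creates a Lambda handler that writes each SQS message body into DynamoDB.
function makeSqsToDynamoHandler(docClient, tableName) {
  return async function handler(event) {
    for (const record of event.Records) {
      const item = JSON.parse(record.body); // assumes the body is a JSON object
      await docClient.put({ TableName: tableName, Item: item });
    }
    return { written: event.Records.length };
  };
}

// Possible wiring inside the Lambda function (assumed, not run here):
//   const { DynamoDB } = require("@aws-sdk/client-dynamodb");
//   const { DynamoDBDocument } = require("@aws-sdk/lib-dynamodb");
//   const docClient = DynamoDBDocument.from(new DynamoDB({}));
//   exports.handler = makeSqsToDynamoHandler(docClient, process.env.TABLE_NAME);
```

If a write throws, the invocation fails and the messages become visible on the queue again after the visibility timeout, so they are retried rather than lost.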

Step 3: Add Lambda SQS Trigger

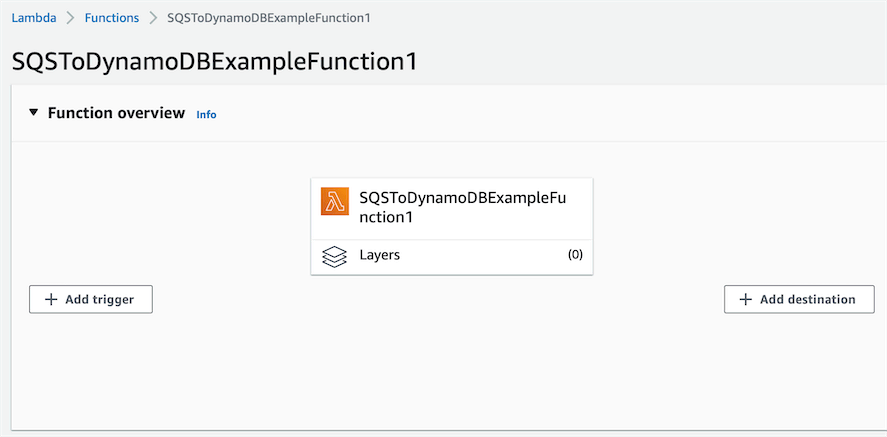

In our Lambda function, there is an Add trigger button that you can click to add a trigger.

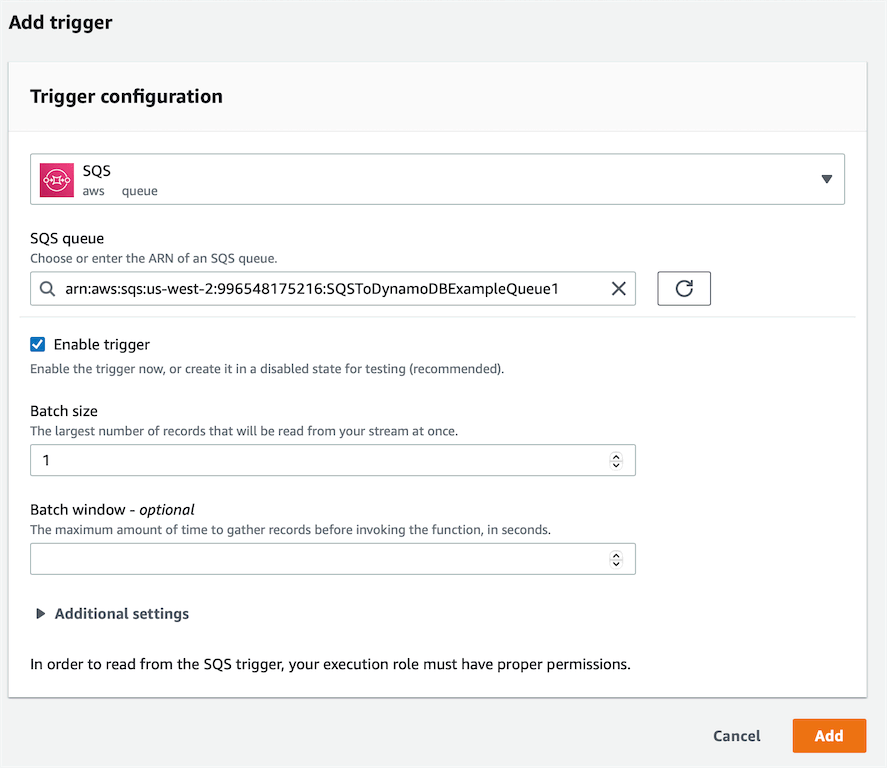

Then you can fill out the add trigger page with the SQS queue settings.

Testing

Now we can test this integration.

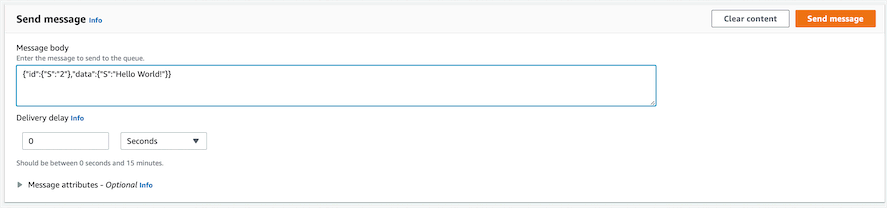

First, I'm going to add an item to the SQS queue.

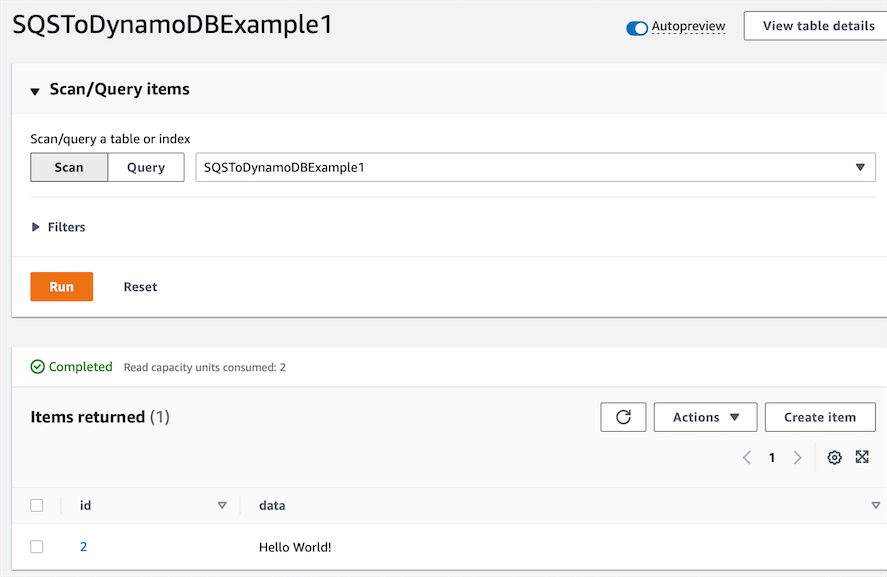

Then let's look in our DynamoDB Table to see if the item was added.

And it was!

Frequently Asked Questions

Can DynamoDB stream to SQS?

Yes. DynamoDB Streams can be used to stream changes to an SQS queue with a Lambda connector function.

Can a DynamoDB table have multiple streams?

Not directly. Each table has a single DynamoDB Stream, but you can add multiple triggers to that stream.

Can I transform an SQS message before storing it in DynamoDB?

Yes. Since we use a Lambda function to write from SQS to DynamoDB, you can easily write code in your Lambda function to transform the SQS message before writing it to DynamoDB.
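As a small illustration, a transform step can be a plain function that turns one SQS record into the item you want to store. The field names below (`email`, `sentAt`) and the `toItem` helper are hypothetical; `messageId`, `body`, and `attributes.SentTimestamp` are fields that a Lambda SQS trigger passes for each record.

```javascript
// Turns one SQS record (as received by a Lambda trigger) into a DynamoDB item.
function toItem(record) {
  const payload = JSON.parse(record.body);
  return {
    id: record.messageId, // reuse the SQS message ID as the key (an assumption)
    email: String(payload.email).trim().toLowerCase(), // normalize before storing
    sentAt: Number(record.attributes.SentTimestamp), // epoch milliseconds
  };
}

const item = toItem({
  messageId: "m-1",
  body: JSON.stringify({ email: "  Ada@Example.com " }),
  attributes: { SentTimestamp: "1664064000000" },
});
console.log(item.email); // ada@example.com
console.log(item.sentAt); // 1664064000000
```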

Can SQS be used as a DynamoDB cache?

Because SQS is a queue, you cannot pull a specific item off the queue. This means it is not suited to be a DynamoDB cache. DynamoDB Accelerator (DAX) is a great solution for an in-memory DynamoDB cache.

Can SQS help reduce my DynamoDB table consumed capacity?

Yes. While SQS can't really help reduce demand on reads from your database, it can be used to spread out write demand over time and handle sudden write spikes to your DynamoDB table. This won't reduce the total write demand on your table, but it can help you smooth your consumed capacity over time.
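To get a feel for the trade-off, you can estimate how long a queued spike takes to drain at a fixed write rate. This is back-of-the-envelope arithmetic rather than a capacity planning tool: `secondsToDrain` is a hypothetical helper, and it assumes a provisioned-capacity table, standard (non-transactional) writes that each cost one write capacity unit per 1 KB of item size (rounded up), and a consumer that writes at exactly the provisioned rate.

```javascript
// Estimates the seconds needed to write a backlog at a fixed provisioned rate.
function secondsToDrain(queuedWrites, itemSizeKb, provisionedWcu) {
  const wcuPerWrite = Math.ceil(itemSizeKb); // writes are billed in 1 KB steps
  const writesPerSecond = Math.floor(provisionedWcu / wcuPerWrite);
  if (writesPerSecond < 1) {
    throw new Error("Provisioned capacity is too low for a single write per second");
  }
  return Math.ceil(queuedWrites / writesPerSecond);
}

// A spike of 60,000 small writes against 100 WCU drains in 10 minutes.
console.log(secondsToDrain(60000, 1, 100)); // 600

// The same spike with 2.5 KB items costs 3 WCU per write, so it takes longer.
console.log(secondsToDrain(60000, 2.5, 100)); // 1819
```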